PRESS RELEASE

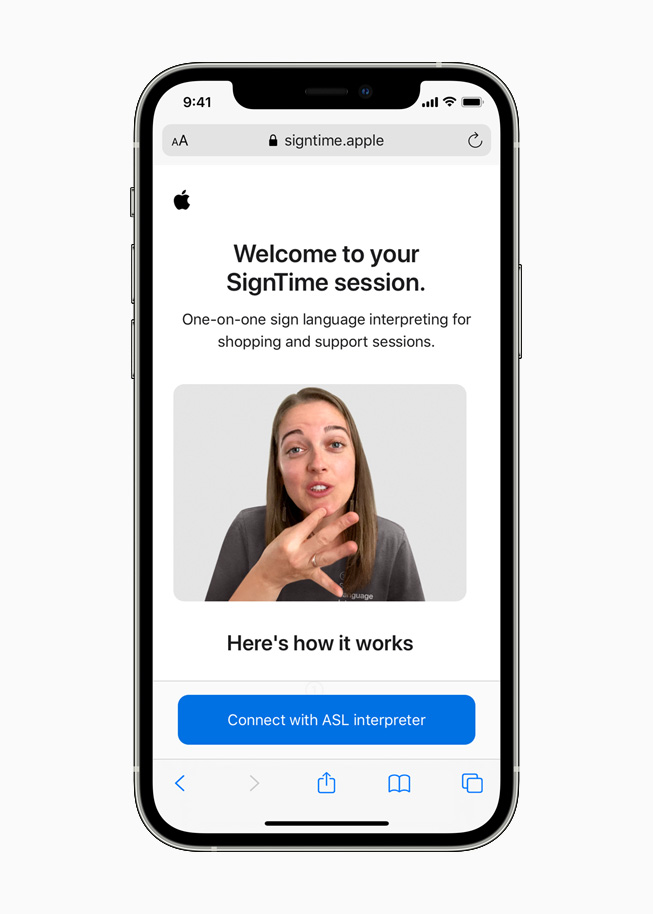

New SignTime service launches Thursday, May 20, to connect Apple Store and Apple Support customers with on-demand sign language interpreters

Customers visiting Apple Store locations in the US, UK, and France can use SignTime to remotely access a sign language interpreter.

Cupertino, California: Apple today announced powerful software features designed for people with mobility, vision, hearing, and cognitive disabilities. These next-generation technologies showcase Apple’s belief that accessibility is a human right and advance the company’s long history of delivering industry-leading features that make Apple products customizable for all users.

Later this year, with software updates across all of Apple’s operating systems, people with limb differences will be able to navigate Apple Watch using AssistiveTouch; iPad will support third-party eye-tracking hardware for easier control; and for blind and low vision communities, Apple’s industry-leading VoiceOver screen reader will get even smarter using on-device intelligence to explore objects within images. In support of neurodiversity, Apple is introducing new background sounds to help minimize distractions, and for those who are deaf or hard of hearing, Made for iPhone (MFi) will soon support new bi-directional hearing aids.

Apple is also launching a new service on Thursday, May 20, called SignTime. This enables customers to communicate with AppleCare and Retail Customer Care by using American Sign Language (ASL) in the US, British Sign Language (BSL) in the UK, or French Sign Language (LSF) in France, right in their web browsers. Customers visiting Apple Store locations can also use SignTime to remotely access a sign language interpreter without booking ahead of time. SignTime will initially launch in the US, UK, and France, with plans to expand to additional countries in the future. For more information, visit apple.com/contact.

“At Apple, we’ve long felt that the world’s best technology should respond to everyone’s needs, and our teams work relentlessly to build accessibility into everything we make,” said Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives. “With these new features, we’re pushing the boundaries of innovation with next-generation technologies that bring the fun and function of Apple technology to even more people — and we can’t wait to share them with our users.”

AssistiveTouch for Apple Watch

To support users with limited mobility, Apple is introducing a revolutionary new accessibility feature for Apple Watch. AssistiveTouch for watchOS allows users with upper body limb differences to enjoy the benefits of Apple Watch without ever having to touch the display or controls. Using built-in motion sensors like the gyroscope and accelerometer, along with the optical heart rate sensor and on-device machine learning, Apple Watch can detect subtle differences in muscle movement and tendon activity, which lets users navigate a cursor on the display through a series of hand gestures, like a pinch or a clench. AssistiveTouch on Apple Watch enables customers who have limb differences to more easily answer incoming calls, control an onscreen motion pointer, and access Notification Center, Control Center, and more.

Eye-Tracking Support for iPad

iPadOS will support third-party eye-tracking devices, making it possible for people to control iPad using just their eyes. Later this year, compatible MFi devices will track where a person is looking onscreen and the pointer will move to follow the person’s gaze, while extended eye contact performs an action, like a tap.

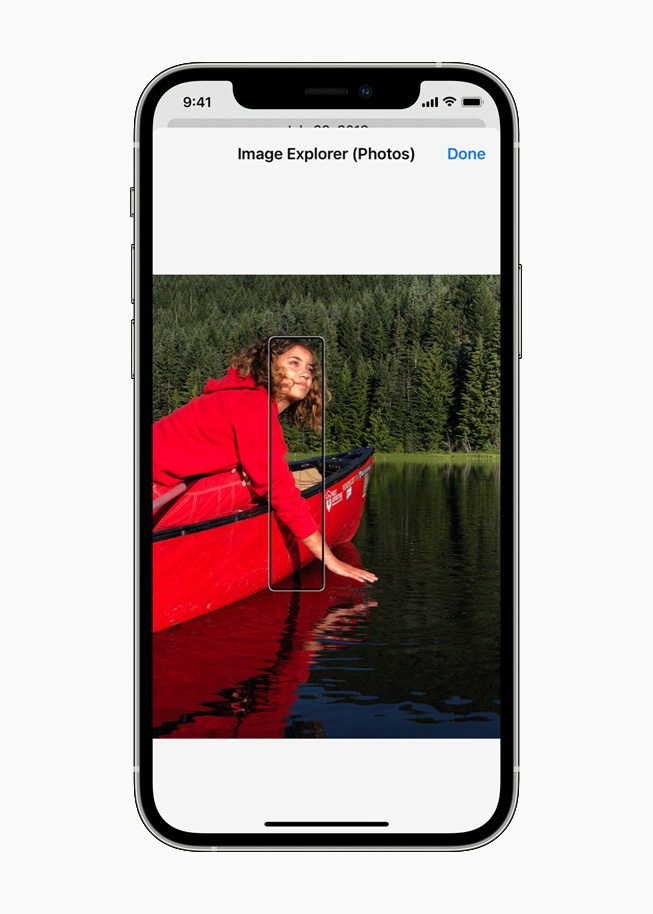

Explore Images with VoiceOver

Apple is introducing new features for VoiceOver, an industry-leading screen reader for blind and low vision communities. Building on recent updates that brought Image Descriptions to VoiceOver, the new features let users explore even more details about the people, text, table data, and other objects within images. Users can navigate a photo of a receipt like a table: by row and column, complete with table headers. VoiceOver can also describe a person’s position along with other objects within images, so people can relive memories in detail. With Markup, users can add their own image descriptions to personalize family photos.

With VoiceOver, users can explore more details about an image with descriptions, such as “Slight right profile of a person’s face with curly brown hair smiling.”

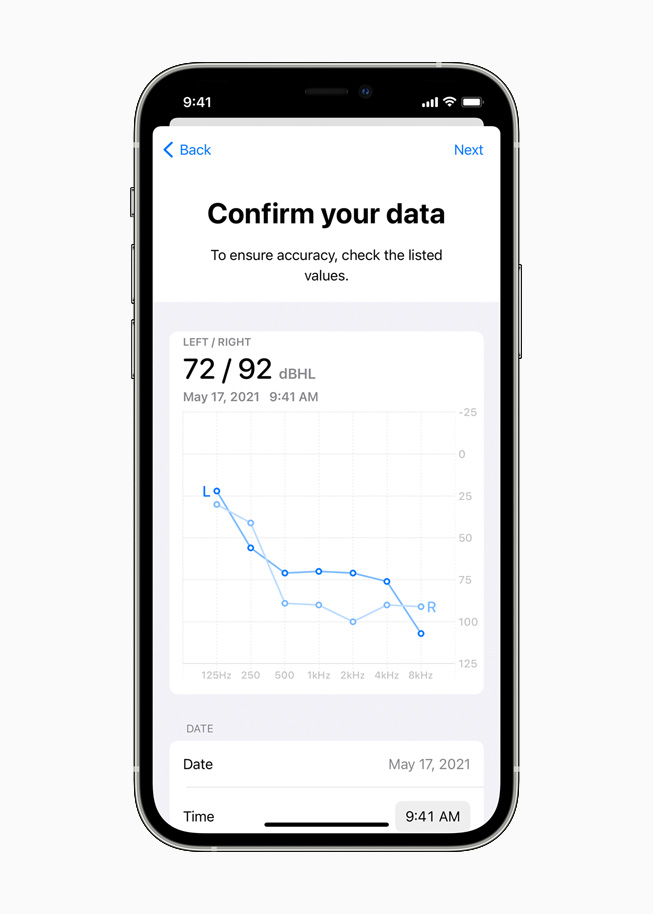

Made for iPhone Hearing Aids and Audiogram Support

In a significant update to the MFi hearing devices program, Apple is adding support for new bi-directional hearing aids. The microphones in these new hearing aids enable those who are deaf or hard of hearing to have hands-free phone and FaceTime conversations. The next-generation models from MFi partners will be available later this year.

Apple is also bringing support for recognizing audiograms — charts that show the results of a hearing test — to Headphone Accommodations. Users can quickly customize their audio with their latest hearing test results imported from a paper or PDF audiogram. Headphone Accommodations amplify soft sounds and adjust certain frequencies to suit a user’s hearing.

Headphone Accommodations will recognize audiograms imported from paper or PDF and display the results in a chart.

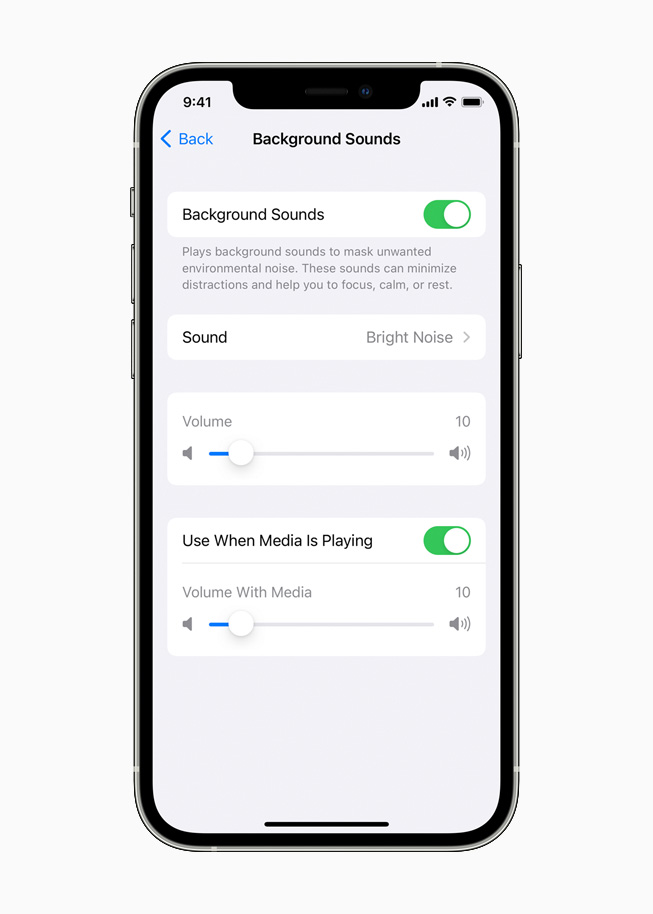

Background Sounds

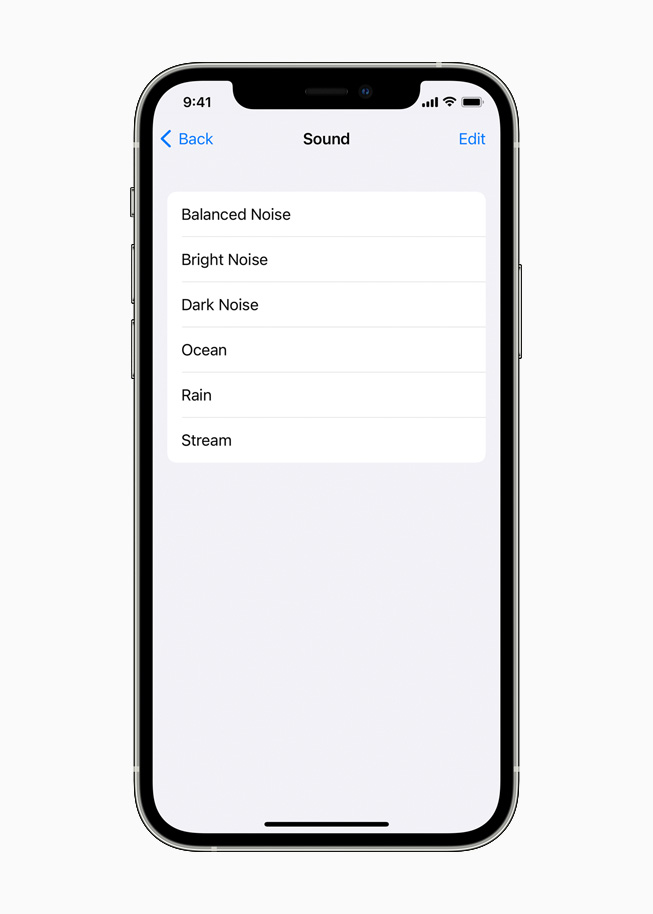

Everyday sounds can be distracting, discomforting, or overwhelming, and in support of neurodiversity, Apple is introducing new background sounds to help minimize distractions and help users focus, stay calm, or rest. Balanced, bright, or dark noise, as well as ocean, rain, or stream sounds, continuously play in the background to mask unwanted environmental or external noise, and the sounds mix into or duck under other audio and system sounds.

Background sounds, such as ocean, rain, and stream sounds, help minimize distractions and help users focus, stay calm, or rest.

Background sounds, such as ocean, rain, and stream sounds, help minimize distractions and help users focus, stay calm, or rest.

Additional features coming later this year include:

- Sound Actions for Switch Control replaces physical buttons and switches with mouth sounds — such as a click, pop, or “ee” sound — for users who are non-speaking and have limited mobility.

- Display and Text Size settings can be customized on an app-by-app basis in all supported apps, so users with colorblindness or other vision challenges can make the screen easier to see.

- New Memoji customizations better represent users with oxygen tubes, cochlear implants, and a soft helmet for headwear.

New Memoji customizations better represent users with oxygen tubes, cochlear implants, and a soft helmet for headwear.

In celebration of Global Accessibility Awareness Day, Apple is also launching new features, sessions, curated collections, and more:

- This week in Apple Fitness+, trainer and award-winning adaptive athlete Amir Ekbatani talks about Apple’s commitment to making Fitness+ as accessible and inclusive as possible. Fitness+ features workouts that are inviting to all: trainers use sign language in each workout to say “Welcome” or “Great job!”; “Time to Walk” episodes become “Time to Walk or Push” for wheelchair workouts on Apple Watch; and all videos include closed captioning. Each workout also includes a trainer demonstrating modifications, so users at all levels can join in.

Closed captioning for every workout is one of the ways Apple is making Fitness+ inclusive and welcoming to all users.

- The Shortcuts for Accessibility Gallery provides useful Siri Shortcuts for tracking medications and supporting daily routines, and a new Accessibility Assistant Shortcut helps people discover Apple’s built-in features and resources for personalizing them.

- Today at Apple is offering live, virtual sessions in ASL and BSL throughout the day on May 20 that teach people with disabilities the basics of iPhone and iPad. In some regions, Today at Apple will offer more Accessibility sessions in stores through May 30.

- In the App Store, customers can read stories about Lucy Edwards, an influencer on TikTok who is blind and shares her favorite accessible apps; App of the Day FiLMiC Pro, which is among the most accessible video apps for blind and low vision filmmakers; and more in the new Express Yourself Your Way collection.

- The Apple TV app will spotlight its Barrier-Breaking Characters collection, which celebrates authentic disability representation onscreen and behind the camera. It features guest curation from creators and artists like the cast of “Best Summer Ever,” who share their favorite movies and shows in an editorial experience designed by American Pop-Op and Urban Folk artist Tennessee Loveless, known for his vibrant illustrations and colorful storytelling told through the lens of his colorblindness.

- Apple Books adds reading recommendations from author and disability rights activist Judith Heumann, along with other themed collections.

- Apple Maps features new guides from Gallaudet University, the world’s premier university for Deaf, hard of hearing, and Deafblind students. The guides help connect users to businesses and organizations that value, embrace, and prioritize the Deaf community and signed languages.
